Improving 3D reconstruction

The idea from the previous meeting was to use more viewpoints, but the current “solution” is not robust enough. So I spent more time studying ways to improve the robustness of each pipeline step.

Quick concept recap

Knowing the fundamental matrix (which can be estimated from at least 8 matching points between the 2 images), we can map a point in the first image to a line in the second image: the epipolar line. The corresponding point, if it is present in the second image, will lie somewhere on that line.
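The point-to-line mapping can be sketched in a few lines. This is only an illustration, not the project's code: the function names are hypothetical, the convention assumed is x2ᵀ · F · x1 = 0 (point x1 in image 1, point x2 in image 2), and the example matrix is the textbook fundamental matrix of an ideal rectified pair, where every epipolar line is horizontal.

```python
import math


def epipolar_line(F, point):
    """Map a pixel (x, y) of image 1 to its epipolar line in image 2.

    Returns (a, b, c) such that a*x + b*y + c = 0, scaled so that
    a^2 + b^2 = 1. Assumes the convention x2^T * F * x1 = 0.
    """
    p = (point[0], point[1], 1.0)  # homogeneous coordinates
    a, b, c = (sum(F[r][k] * p[k] for k in range(3)) for r in range(3))
    norm = math.hypot(a, b)
    if norm == 0.0:
        raise ValueError("degenerate line: the point maps to the epipole")
    return a / norm, b / norm, c / norm


def distance_to_line(line, point):
    """Distance in pixels from a point to a normalized line (a, b, c)."""
    a, b, c = line
    return abs(a * point[0] + b * point[1] + c)


# Assumed example: F of an ideal rectified stereo pair (pure horizontal shift).
F_rectified = [[0.0, 0.0, 0.0],
               [0.0, 0.0, -1.0],
               [0.0, 1.0, 0.0]]
line = epipolar_line(F_rectified, (120.0, 45.0))  # the row y = 45 in image 2
```

A candidate match in image 2 can then be scored with `distance_to_line`: a true correspondence should be at a distance close to zero, up to noise in F.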

I started by trying to improve the “dense matching” algorithm since the matching algorithm in the 1D search is very simplistic and not robust enough. So, I started adapting the code to be compatible with feature-detection algorithms, which I believe will give much better results but take much more time to compute.

This leads to two options:

- Send only the ROI of the image for the 1D search. I could not figure out whether the feature-detection algorithms ignore the black region outside the ROI, which is why there is a second option.

- The second option is trickier. It involves sending a rectangular image to the feature-detection algorithm so that only the ROI is analyzed. The first step is to rotate the image by the inclination angle of the epipolar line, which gives the image in the window “Rotated image”. The ROI then becomes a rectangular section of the image, as in the window “Cropped to the window height”, with the black regions removed. I have some doubts about this method, starting with the fact that the epipolar line is not well defined: its thickness is not 1 pixel (see the window “Section of the cropped image”). Worse, I don’t know whether running the feature-detection algorithm on the entire image with a very high number of keypoints, and then applying the ROI only in the feature-matching step, would give similar results at a lower computational cost.
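Both the rotation angle of the second option and the alternative raised at the end of it (detect keypoints once over the whole image, then restrict matching to a band around the epipolar line) reduce to simple geometry on the line coefficients. The sketch below is an assumption-laden illustration: the function names and the `half_width` parameter are hypothetical, and keypoints are plain `(x, y)` tuples rather than the keypoint objects of any particular library.

```python
import math


def line_angle_degrees(line):
    """Inclination of the line a*x + b*y + c = 0 relative to the x-axis.

    The result is folded into (-90, 90]. Image coordinates have y pointing
    down, so the sign needed by a rotation routine depends on its convention.
    """
    a, b, _ = line
    angle = math.degrees(math.atan2(-a, b))  # direction vector is (b, -a)
    if angle > 90.0:
        angle -= 180.0
    elif angle <= -90.0:
        angle += 180.0
    return angle


def keypoints_near_line(keypoints, line, half_width):
    """Keep only the keypoints within half_width pixels of the line."""
    a, b, c = line
    norm = math.hypot(a, b)
    return [(x, y) for (x, y) in keypoints
            if abs(a * x + b * y + c) / norm <= half_width]


# Hypothetical usage: epipolar line y = 45, band of +/- 3 pixels.
candidates = keypoints_near_line(
    [(10, 44), (200, 47.5), (50, 60)], (0.0, -1.0, 45.0), half_width=3.0)
```

With the band filter, the expensive detection step runs once per image, and only the cheap distance test is repeated for every epipolar line. Whether that matches the quality of the rotate-and-crop approach still has to be measured.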

Eventually, I put these studies on hold and looked into ways to find the common region between the two viewpoints. This should help later with the occlusion problem. ChatGPT and Bing Chat gave me the following directions:

- “Traditional” approaches:

- Estimate a homography using feature-detection and matching algorithms, then use the homography to warp one image onto the other and find the common regions. Here is an illustration of the warping operation:

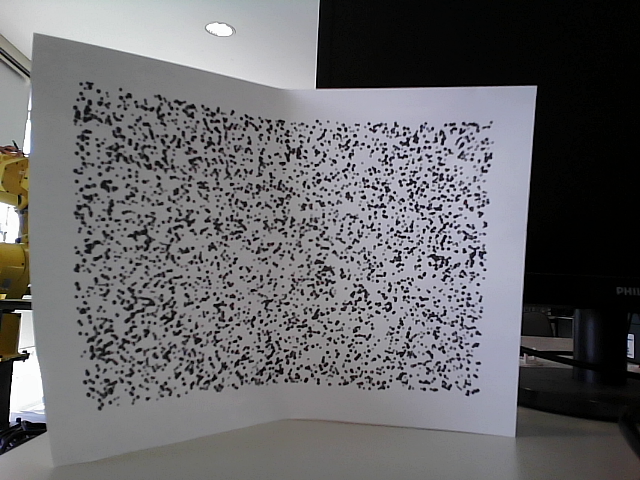

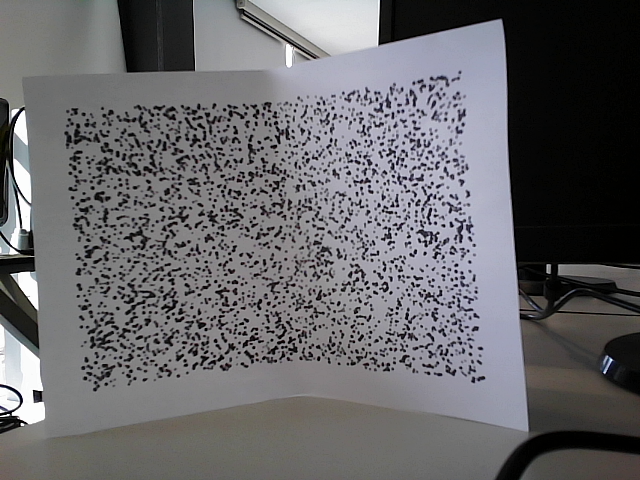

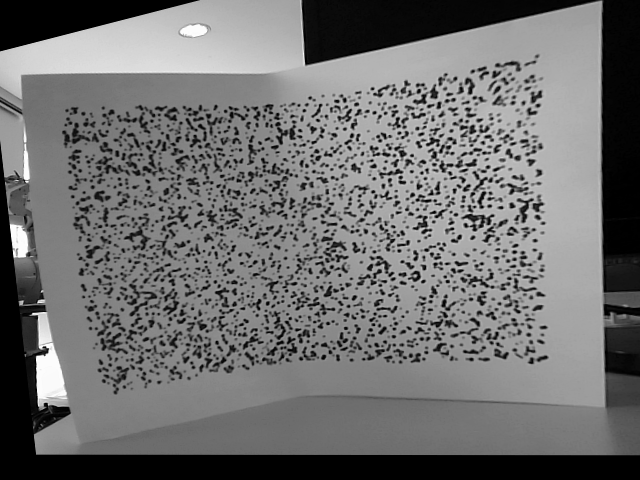

In order: image 1, image 2, and aligned image 1.

The chat only suggests a bitwise operation to find the common region, but that doesn’t make sense here since the backgrounds are totally different. I kept this example because the object (a sheet of paper) takes a similar shape in the two images, and I could use that to identify and isolate the object.

- Block comparison: divide each image into small blocks, compare every block of image 1 to every block of image 2, and keep the pairs whose similarity exceeds a threshold.

- Machine learning: From the two chats, the suggestions were: Siamese networks, collaborative segmentation, stereo matching networks, image registration networks, and deep image matching. I didn’t explore these fields because I felt that I needed a deeper understanding of machine learning, and I started taking an online course on the subject.
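The block-comparison idea from the list above can be sketched as follows. The chats did not specify a similarity measure, so the one used here (1 minus the normalized mean absolute difference of 8-bit grayscale values) is an assumption, as are the function names and the representation of images as lists of rows.

```python
def split_blocks(image, size):
    """Yield ((row, col), block) for every full size x size block."""
    rows, cols = len(image), len(image[0])
    for r in range(0, rows - size + 1, size):
        for c in range(0, cols - size + 1, size):
            yield (r, c), [row[c:c + size] for row in image[r:r + size]]


def similarity(block_a, block_b):
    """1.0 for identical blocks, 0.0 for maximally different 8-bit blocks."""
    diffs = [abs(a - b)
             for row_a, row_b in zip(block_a, block_b)
             for a, b in zip(row_a, row_b)]
    return 1.0 - sum(diffs) / (255.0 * len(diffs))


def matching_blocks(image_1, image_2, size, threshold):
    """Compare every block of image 1 to every block of image 2.

    Returns (position_1, position_2, score) for each pair whose
    similarity is strictly greater than the threshold.
    """
    blocks_2 = list(split_blocks(image_2, size))
    matches = []
    for pos_1, block_1 in split_blocks(image_1, size):
        for pos_2, block_2 in blocks_2:
            score = similarity(block_1, block_2)
            if score > threshold:
                matches.append((pos_1, pos_2, score))
    return matches


# Tiny hypothetical example: two 2x4 grayscale images, 2x2 blocks.
image_1 = [[0, 0, 255, 255],
           [0, 0, 255, 255]]
image_2 = [[255, 255, 10, 10],
           [255, 255, 10, 10]]
matches = matching_blocks(image_1, image_2, size=2, threshold=0.9)
```

The cost grows with the product of the block counts of the two images, and uniform regions (such as a plain background) match each other everywhere, so in practice the candidate pairs would probably need to be restricted, for example with the epipolar constraint.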

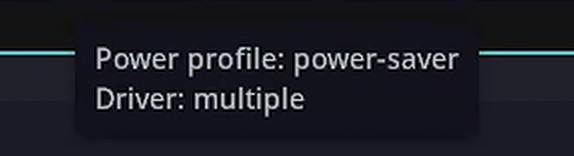

During these studies, I had a problem with my computer: I had neglected the updates and eventually could not install anything. I formatted the computer and took the opportunity to start working only in Docker environments, so that I don’t lose the dependencies of my code if I ever have to format the computer again. When I tested the code, the C++ part was much slower. After building different versions of OpenCV, GCC, and G++ from source, I finally found the issue 🤦‍♂️:
